This article explains how to enable and configure the AI Search, AI Translation, and AI Related Topics features of the Discourse AI plugin. It is based on the official tutorial and on my own experience enabling these features on a Chinese-language Discourse site.

Demo Site: https://bbs.eeclub.top/

This is a Discourse forum I set up, with the Discourse AI plugin enabled. It supports multiple languages. After posting, content is automatically translated into various languages using AI. New user posts are automatically reviewed by AI to reduce spam.

- Series on Website Setup: https://blog.zeruns.com/category/web/

- Tutorial: Setting up a PHP website with Rainyun RCA Cloud Application (K8s-based): https://blog.zeruns.com/archives/869.html

- Tutorial: Setting up a Flarum Forum Website from Scratch: https://blog.zeruns.com/archives/866.html

I will publish a Discourse forum setup tutorial soon.

Prerequisites

The AI API used in this article is from SiliconFlow. Registering via my link grants 20 million Tokens (approx. 14 CNY).

- Invitation Registration Link: https://cloud.siliconflow.cn/i/hSviAP2x

- Invitation Code: hSviAP2x

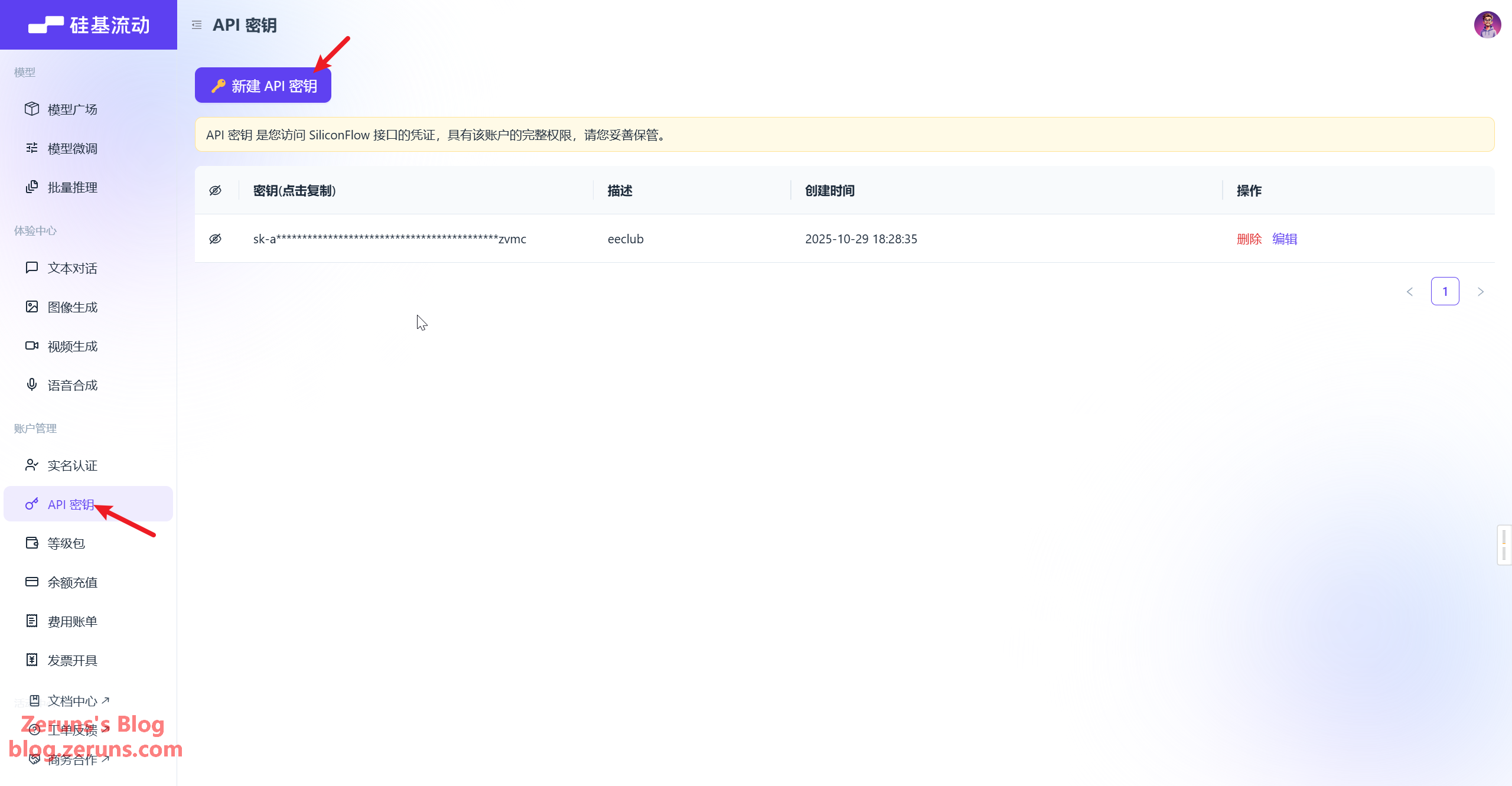

After registering a SiliconFlow account, create an API Key: Go to "API Keys" in the left sidebar → "Create API Key" → Copy the sk-xxxxxxxxxx key.

Note these two common endpoints (will be used later):

- LLM Chat: https://api.siliconflow.cn/v1/chat/completions

- Embedding: https://api.siliconflow.cn/v1/embeddings

SiliconFlow is compatible with the OpenAI format and can directly use the OpenAI configuration page of the official AI plugin.
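To make "compatible with the OpenAI format" concrete, here is a minimal Python sketch of a chat request against the LLM endpoint above. The function names `build_chat_request` and `send` are hypothetical helpers invented for this illustration, and the payload shows only the basic `model` and `messages` fields of the OpenAI chat format; check SiliconFlow's documentation for the full parameter list.

```python
import json
import urllib.request

# LLM Chat endpoint from the list above.
CHAT_URL = "https://api.siliconflow.cn/v1/chat/completions"


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> dict:
    """Send the request and return the parsed JSON body (a real API call that consumes tokens)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


# Usage (not executed here; replace the placeholder key and model ID with your own):
# reply = send(build_chat_request("sk-xxxxxxxxxx", "<model id>", "Say hello."))
# print(reply["choices"][0]["message"]["content"])
```

If this request succeeds from your own machine, the same URL and key should also work in the plugin's OpenAI configuration page.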

Discourse AI Plugin Introduction

Discourse AI is an AI assistant focused on community management. Its core value lies in saving operational time, ensuring community safety and order, while enhancing user engagement and providing management insights.

1. Moderation & Management

- Automated Moderation: Detects toxic content, flags NSFW posts, and filters spam with a claimed 99% accuracy. Enable with one click and fine-tune flexibly.

- Dedicated AI Assistant: Supports custom system prompts and parameters. Can search the forum, access the web, and retrieve uploaded documents to serve users via chat/private messages.

- Utility Toolkit: Built-in features for proofreading, translation, and content optimization. Can generate summaries, titles, smart dates, etc.

2. Engagement & Discovery

- Semantic Search: Goes beyond keyword matching to find contextually relevant content accurately, improving search efficiency.

- Related Topic Recommendations: Suggests associated discussions at the end of topics based on deep semantic similarity analysis, promoting continued interaction.

- Quick Summarization: Condenses the core information of long conversations, helping users catch up quickly and reducing information lag.

3. Insights & Analysis

- Community Sentiment Monitoring: Analyzes discussion content for sentiment and emotional scores, capturing user attitude trends.

- Automated Reporting: Generates reports on forum activity, hot discussions, user behavior, etc., aiding management decisions.

- AI Usage Monitoring: Tracks token consumption and request volume for different models and functions, providing clear cost and usage oversight.

4. Data Security & Flexibility

- Data Ownership: AI data is stored alongside community content. Users permanently own their data.

- Privacy Protection: Uses open-weight LLMs; user data is not used for model training, ensuring content security and control.

- Multi-Provider Support: Choose from 10+ AI service providers like OpenAI, Anthropic, Microsoft Azure, etc., and adapt to custom models.

Configuring the LLM (Large Language Model)

What is an LLM? The LLM (Large Language Model) is the "brain" behind the AI features: it understands natural language and generates responses (e.g., translation results, search summaries). SiliconFlow provides various LLMs compatible with the OpenAI API.

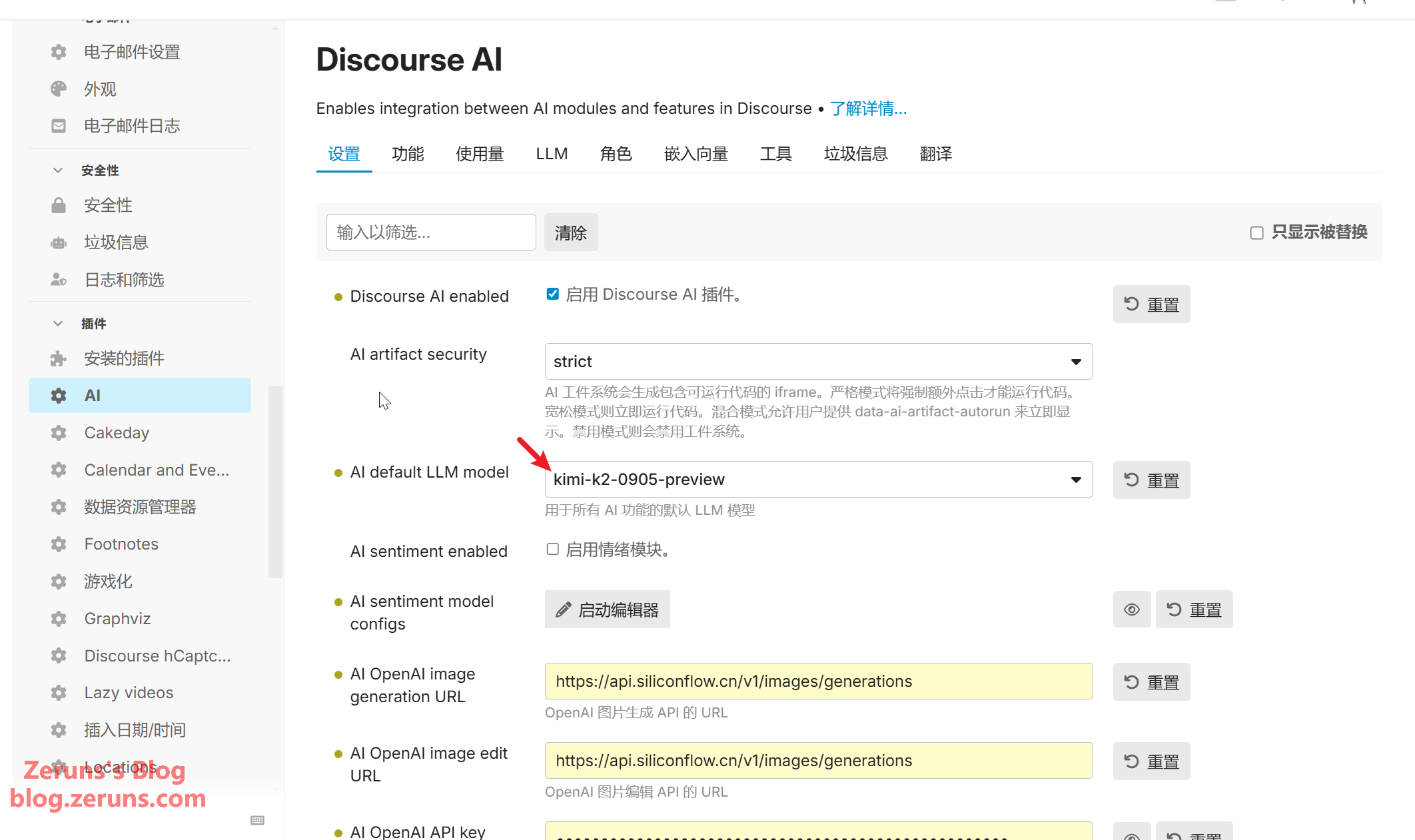

Enable the Discourse AI plugin in the Discourse Admin Panel. You must enable the plugin first for the LLM model settings to appear.

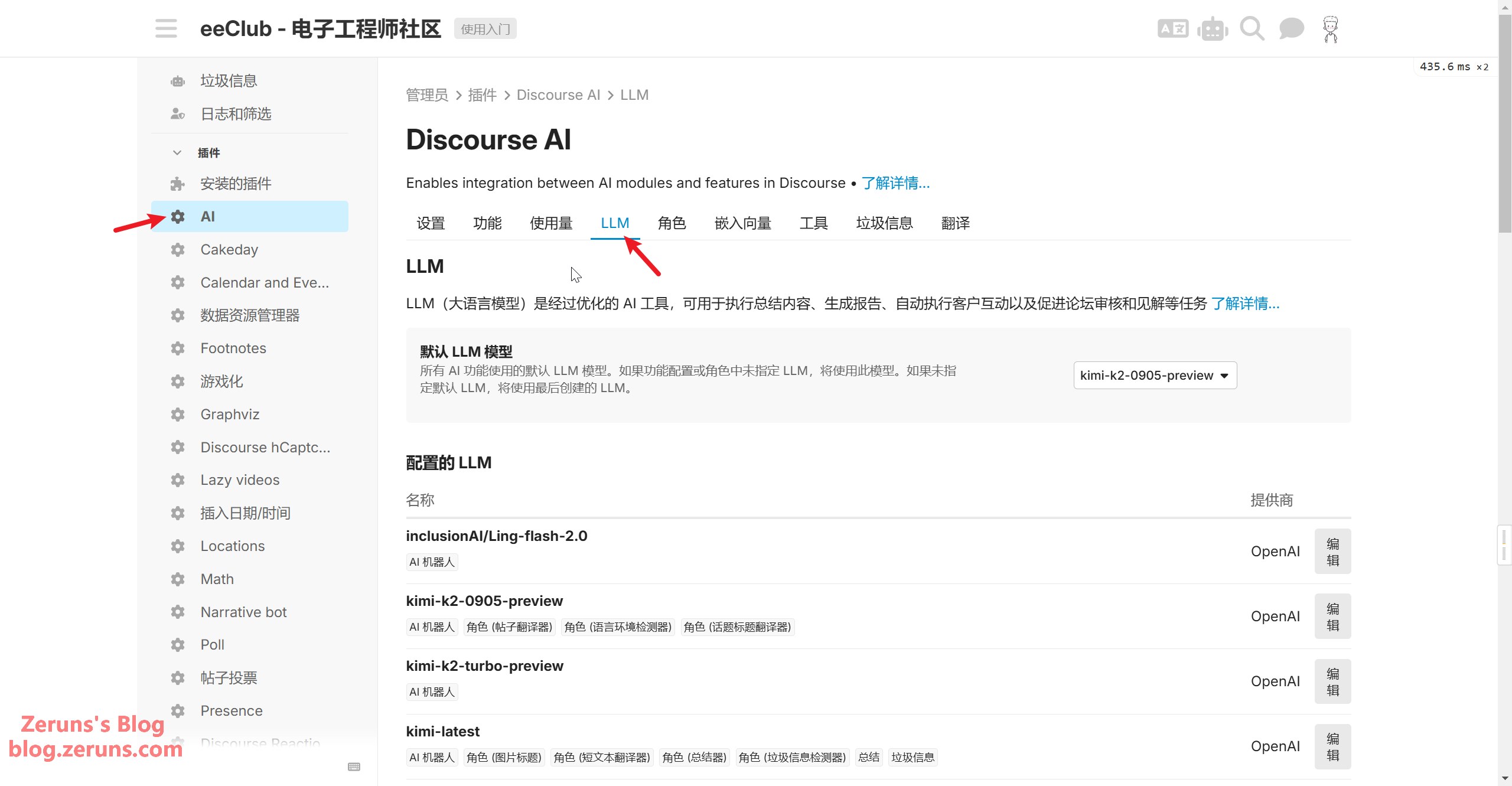

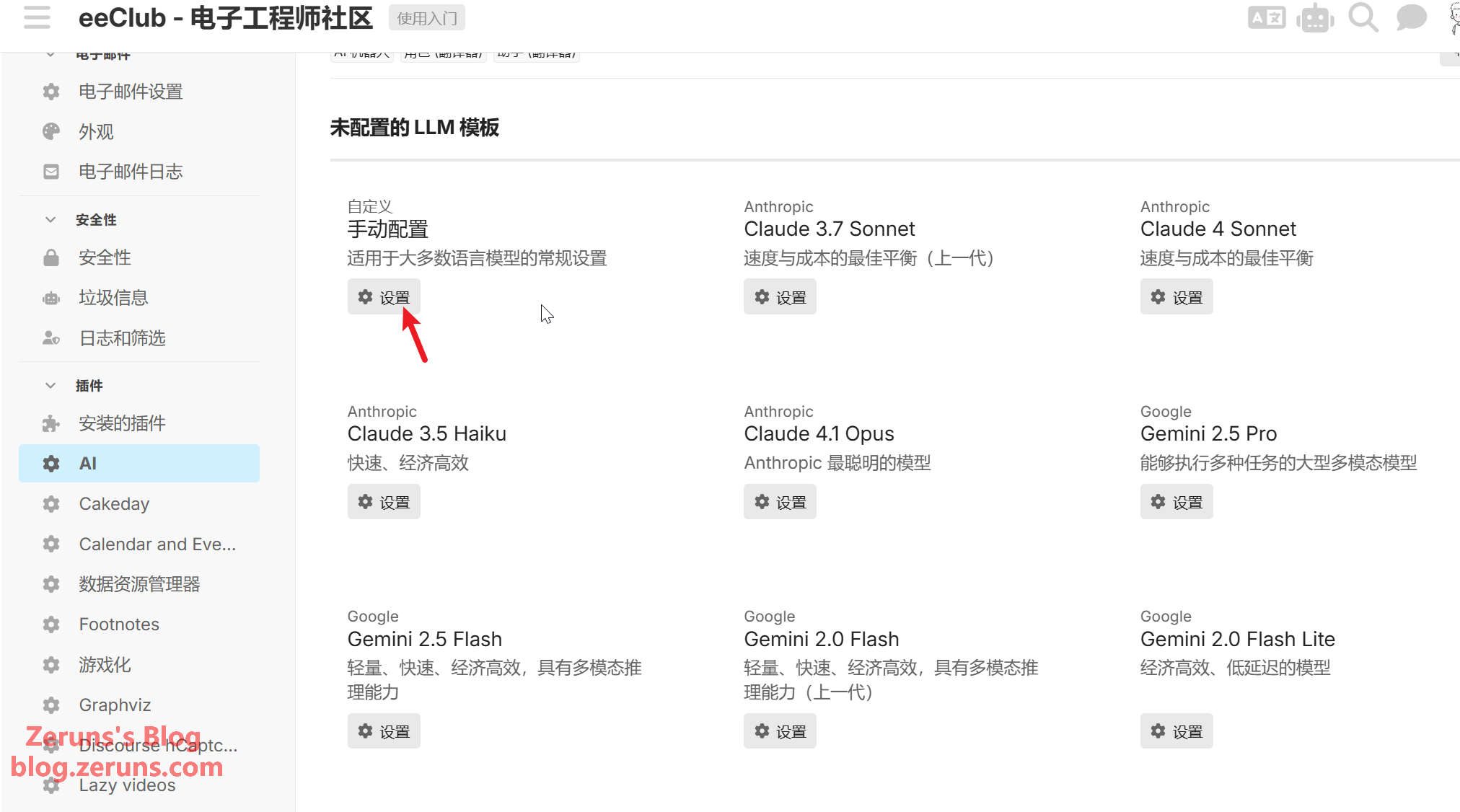

Navigate to the Settings page of the AI plugin, click LLM, scroll down to Unconfigured LLM Templates, and click the Configure button under Custom - Manual Configuration.

- Provider: Select OpenAI.

- URL of the service hosting the model: Enter the LLM API endpoint mentioned above: https://api.siliconflow.cn/v1/chat/completions (if SiliconFlow's API address changes, check their developer documentation for the updated address).

- API Key for the service hosting the model: Paste the copied API Key.

- Model Name: Choose a name for your reference.

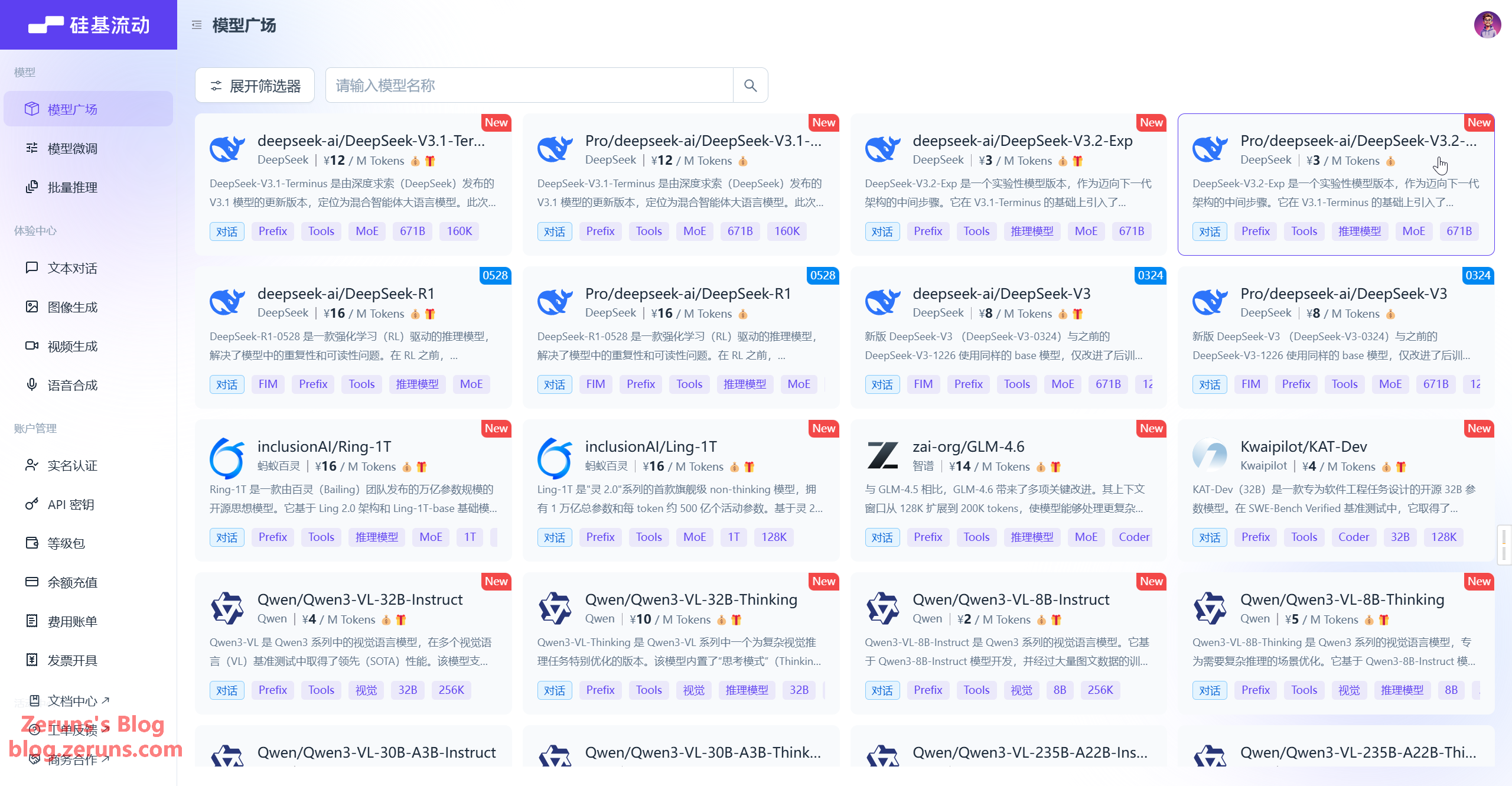

- Model ID: Go to SiliconFlow's Model Hub, select a model, and copy its ID (note: some models cannot use the free credits). Here, I selected Pro/deepseek-ai/DeepSeek-V3.2-Exp.

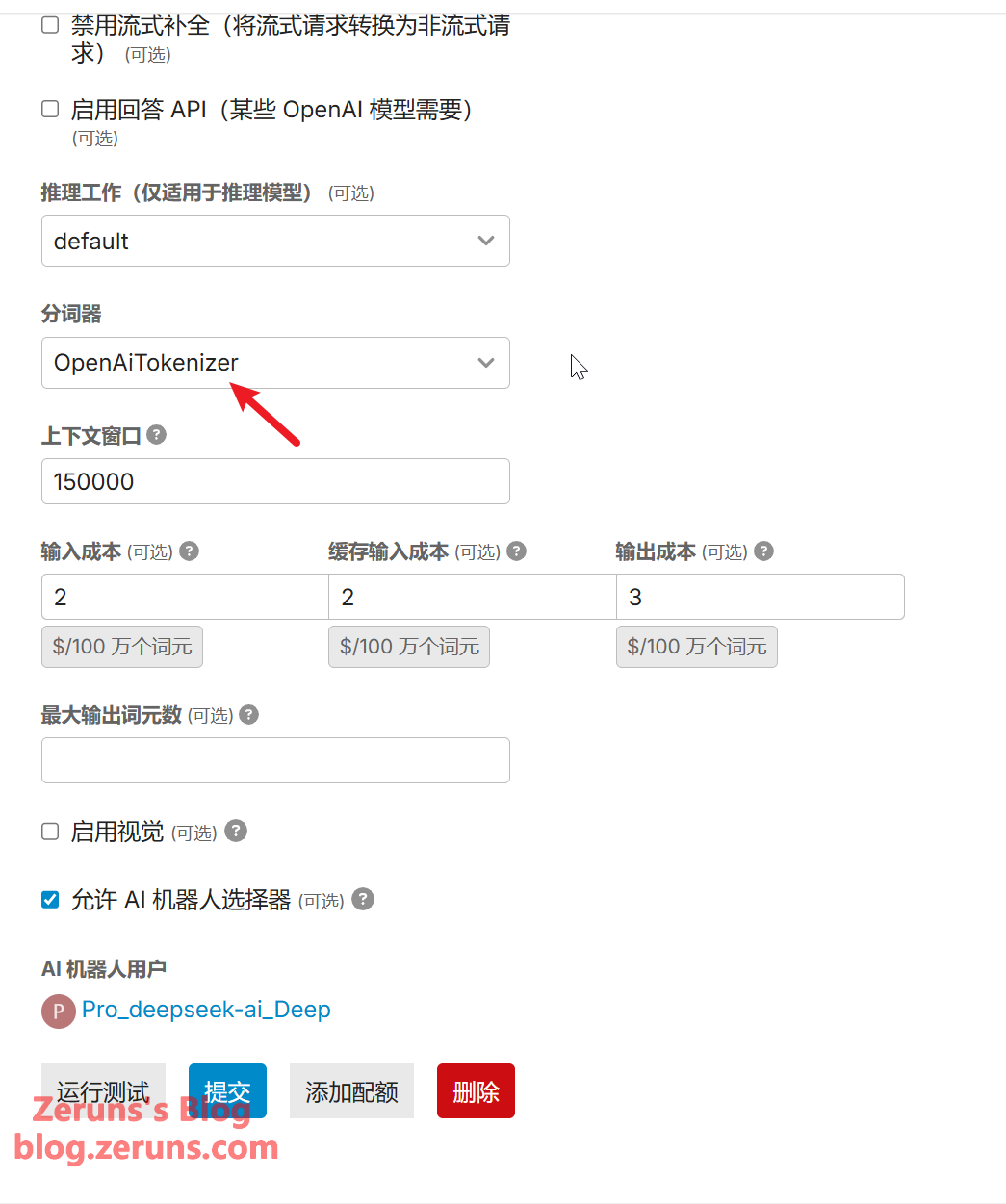

- Tokenizer: Generally, select OpenAiTokenizer.

- Context Window: Check the model's documentation for this value. For the model I selected, it's 160K, so enter 160000.

After configuring, click Submit, then click Run Test to check for any issues.
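If Run Test fails, it can help to check the raw API response yourself. The sketch below shows how the reply text sits inside an OpenAI-format chat completion body. It is an independent check, not a description of what the plugin's test does internally; `extract_reply` is a hypothetical helper, and the sample body is hand-written in the OpenAI response shape rather than real API output.

```python
import json


def extract_reply(response_body: str) -> str:
    """Return the assistant text from an OpenAI-format chat completion response."""
    data = json.loads(response_body)
    choices = data.get("choices") or []
    if not choices:
        # A body without choices usually means the provider returned an error object instead.
        raise RuntimeError(f"No choices in response: {data}")
    return choices[0]["message"]["content"]


# Hand-written sample in the OpenAI response shape; not real API output.
sample = json.dumps({
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "pong"}}
    ]
})

print(extract_reply(sample))
```

A response with a non-empty `choices` list suggests the URL, key, and model ID are all accepted by the provider.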

You can repeat the steps above to add multiple different models or AI service providers.

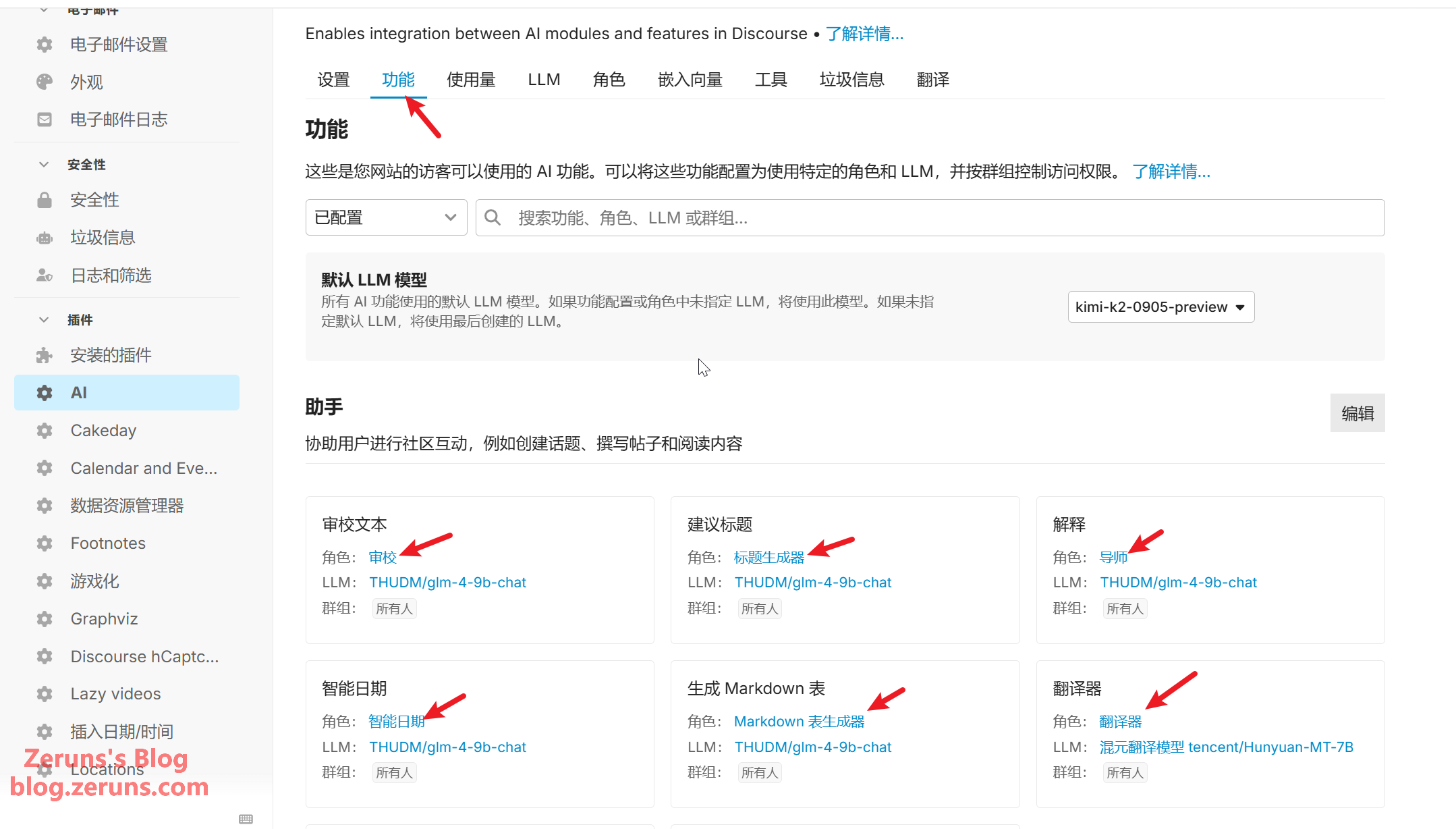

In the feature settings, you can assign different models to different functions. Simpler functions can be assigned a dedicated free model.

Configuring the Embedding Model

What is an Embedding Model? An embedding model converts text into "semantic vectors" that a computer can compare. It is the core of AI Search and Related Topics recommendations (e.g., it lets the system recognize that "Discourse email configuration" and "how to set up Discourse email notifications" mean nearly the same thing).
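The idea of comparing semantic vectors can be sketched with a few lines of Python. The 3-dimensional vectors below are invented for illustration (real embedding models produce far longer vectors), and the topic titles are examples only. The distance used is the negative inner product: the smaller the value, the closer the meaning. For unit-length vectors it ranks results in the same order as cosine similarity.

```python
import math


def normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def negative_inner_product(a, b):
    """Distance between two vectors: more negative means more similar."""
    return -sum(x * y for x, y in zip(a, b))


# Toy vectors made up for this example; a real model would compute them from the text.
query = normalize([0.9, 0.1, 0.2])  # "Discourse email configuration"
topics = {
    "how to set up Discourse email notifications": normalize([0.8, 0.2, 0.3]),
    "Minecraft server setup": normalize([0.1, 0.9, 0.1]),
}

# Sort topics from closest to farthest from the query.
ranked = sorted(topics, key=lambda title: negative_inner_product(query, topics[title]))
print(ranked[0])
```

With these toy values, the email notifications topic ranks first even though its wording differs from the query, which is the behavior semantic search relies on.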

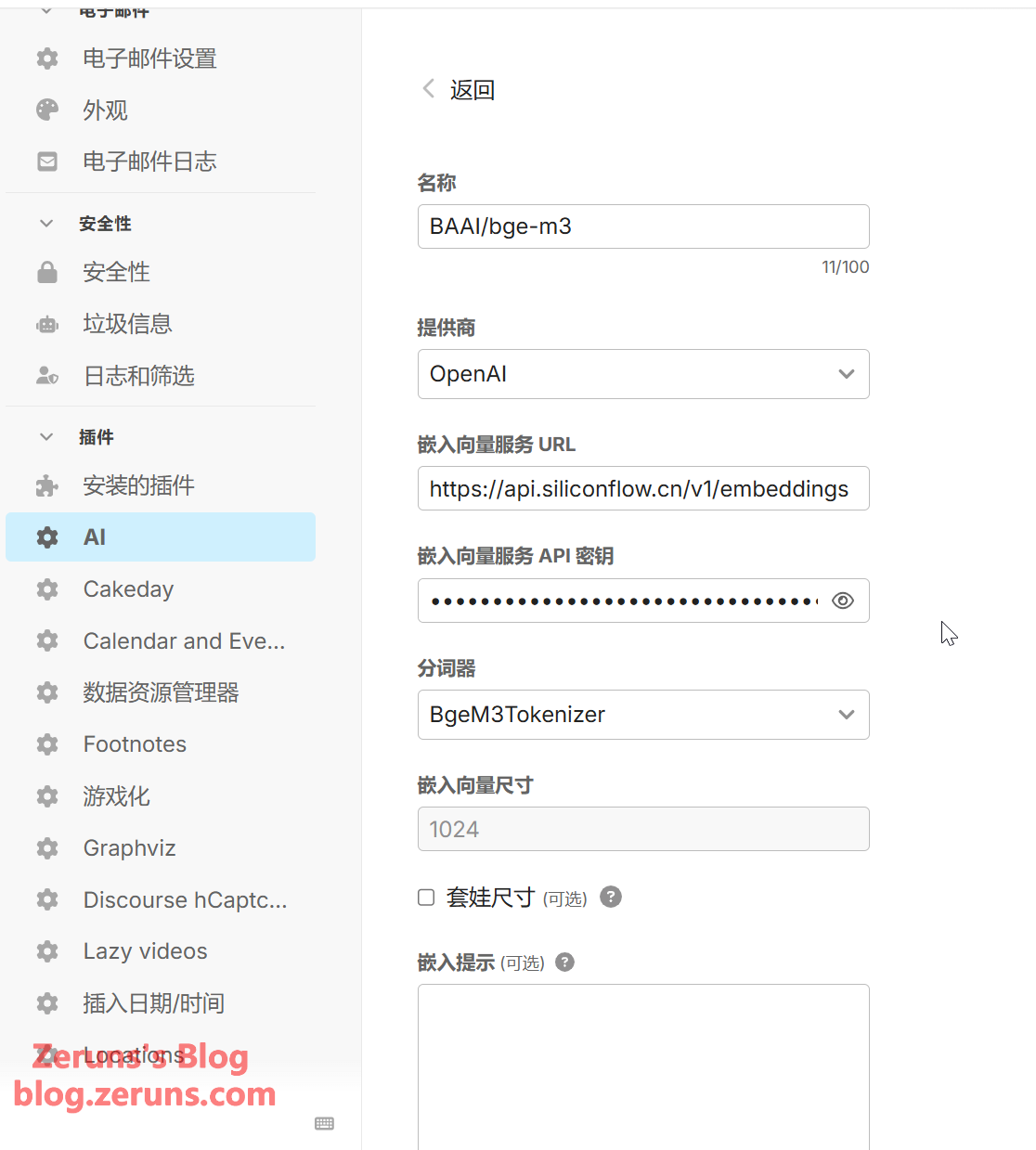

Click Embeddings → New Embedding

- Provider: Select OpenAI.

- Embedding Service URL: Enter the Embedding API endpoint mentioned above: https://api.siliconflow.cn/v1/embeddings (if SiliconFlow's API address changes, check their developer documentation for the updated address).

- Embedding Service API Key: Paste the copied API Key.

- Model Name: Choose a name for your reference.

- Tokenizer: Select BgeM3Tokenizer.

- Model ID: Enter BAAI/bge-m3. This model is free on SiliconFlow.

- Distance Function: Select Negative Inner Product.

- Sequence Length: Enter 8000.

After configuring, click Save, then click Run Test to check for any issues.
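For reference, a request to the embedding endpoint can be sketched as follows, assuming it follows the standard OpenAI embeddings format (`model` and `input` in the request, the vector under `data[0].embedding` in the response). `build_embedding_request` and `extract_embedding` are hypothetical helper names, and the sample response is hand-written with a shortened vector, not real API output.

```python
import json
import urllib.request

# Embedding endpoint and model ID from the settings above.
EMBEDDINGS_URL = "https://api.siliconflow.cn/v1/embeddings"
EMBEDDING_MODEL = "BAAI/bge-m3"


def build_embedding_request(api_key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format embeddings request."""
    payload = {"model": EMBEDDING_MODEL, "input": text}
    return urllib.request.Request(
        EMBEDDINGS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_embedding(response_body: str) -> list:
    """Return the first embedding vector from an OpenAI-format response body."""
    return json.loads(response_body)["data"][0]["embedding"]


# Hand-written sample in the OpenAI response shape; the vector is shortened for readability.
sample = json.dumps({"data": [{"index": 0, "embedding": [0.12, -0.03, 0.57]}]})
print(extract_embedding(sample))
```

To call the API for real, pass the built request to `urllib.request.urlopen` and feed the response body to `extract_embedding`.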

AI Feature Settings

On the AI plugin settings page, select a model as the default in the AI default LLM model setting.

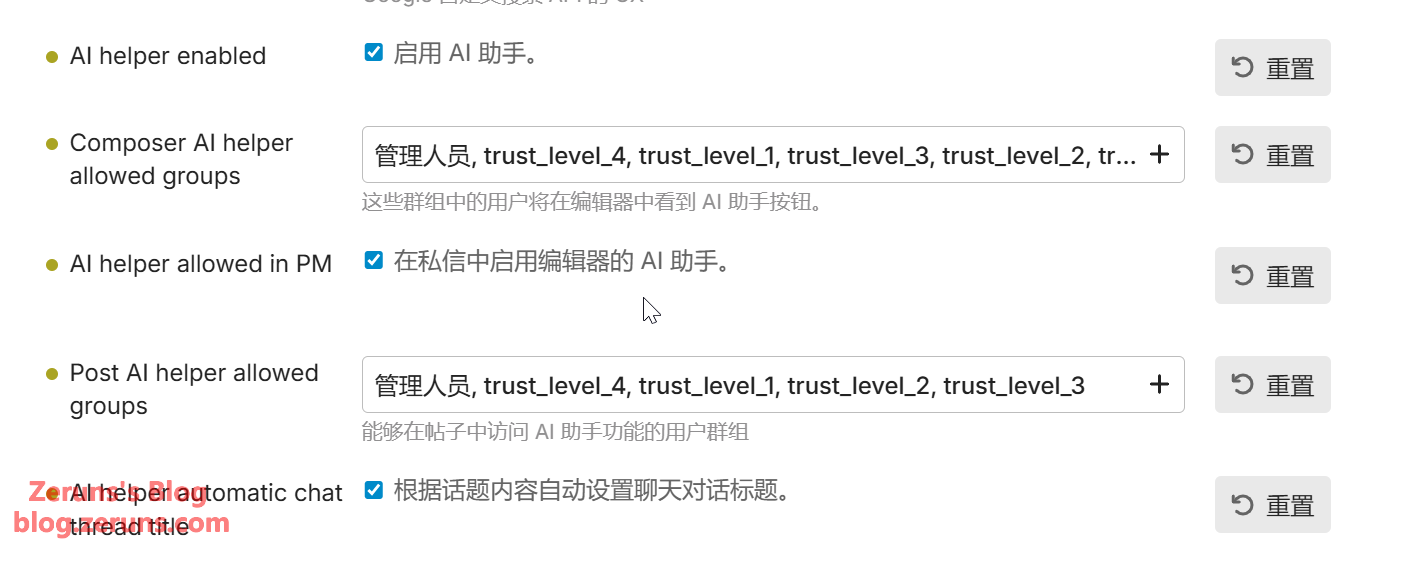

Scroll down to the AI helper enabled setting to enable the AI Assistant. Below, you can specify which user groups have permission to use it. This AI Assistant appears in the post composer and aids editing with features like translating content, proofreading text, generating Markdown tables, creating titles, etc.

Scroll down to the AI embeddings enabled setting to enable embeddings. For AI embeddings selected model, select the BAAI/bge-m3 model added earlier.

Scroll down to the AI summarization enabled setting to enable the summarization feature, which can generate summaries for topics (posts).

There are various other AI feature settings below that I won't detail one by one; feel free to explore them.

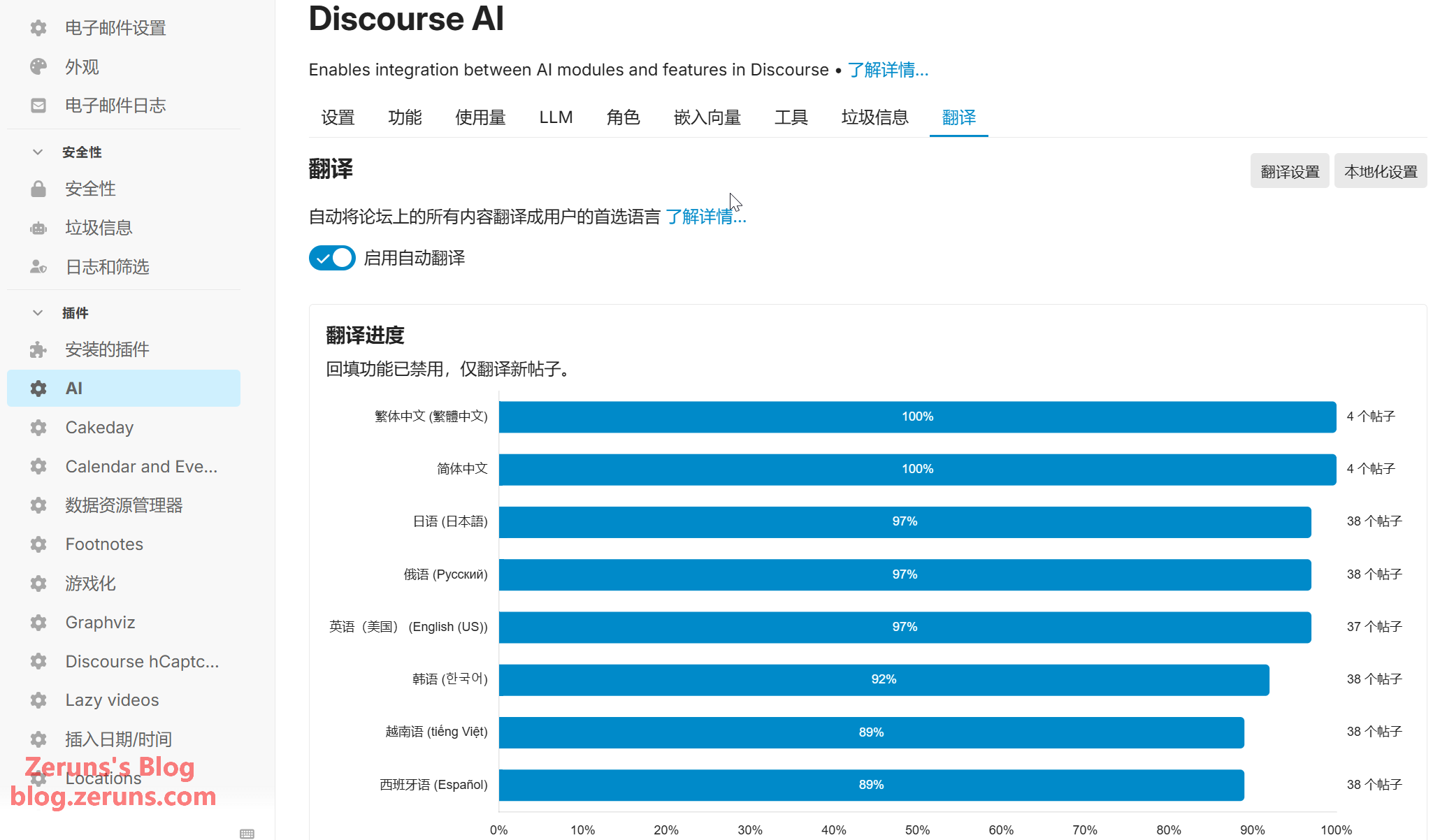

There's also the AI Translation feature, which automatically translates all forum content into the user's preferred language.

Recommended Reading

- Cost-Effective and Cheap VPS/Cloud Server Recommendations: https://blog.vpszj.cn/archives/41.html

- Minecraft Server Setup Tutorial: https://blog.zeruns.com/tag/mc/

- Hiking at Shenzhen Bijia Mountain & Photos of Shenzhen Sunset/Night Views: https://blog.zeruns.com/archives/916.html

- Teardown Analysis of DJI Mini 2 Drone: https://blog.zeruns.com/archives/912.html

- Quick Review of BenQ RD280U Monitor: A Professional Programming Monitor, 3:2 Aspect Ratio + 28-inch 4K Resolution: https://blog.zeruns.com/archives/915.html

- 【Open Source】24V3A Flyback Switching Power Supply (Based on UC3842, includes circuit and transformer parameter calculations): https://blog.zeruns.com/archives/910.html
